Table shards

What are table shards in ClickHouse?

This topic doesn’t apply to ClickHouse Cloud, where Parallel Replicas serve the same purpose.

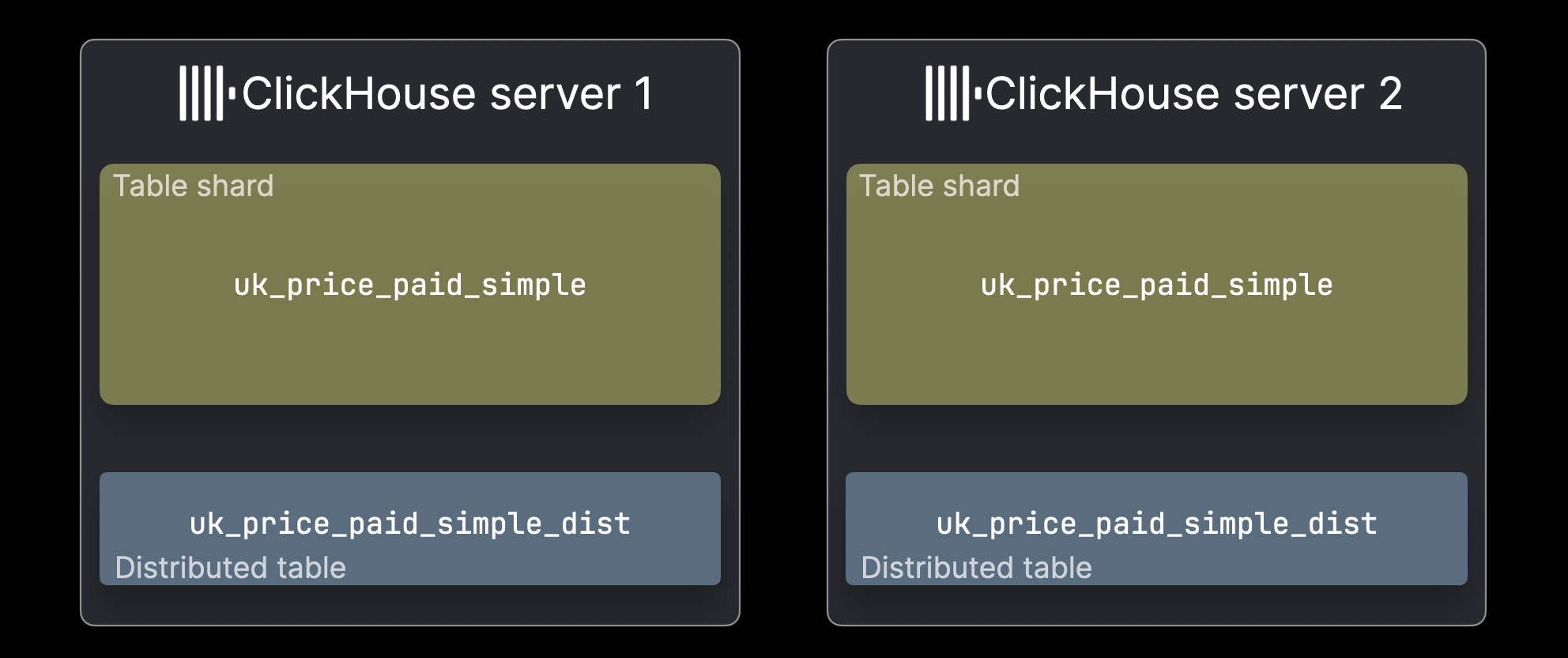

In ClickHouse OSS, sharding is used when ① the data is too large for a single server or ② a single server is too slow for processing. The next figure illustrates case ①, where the uk_price_paid_simple table exceeds a single machine’s capacity:

In such a case the data can be split over multiple ClickHouse servers in the form of table shards:

Each shard holds a subset of the data and functions as a regular ClickHouse table that can be queried independently. However, queries will only process that subset, which may be valid depending on data distribution. Typically, a distributed table (often per server) provides a unified view of the full dataset. It doesn’t store data itself but forwards SELECT queries to all shards, assembles the results, and routes INSERTS to distribute data evenly.

Distributed table creation

To illustrate SELECT query forwarding and INSERT routing, we consider the What are table parts example table split across two shards on two ClickHouse servers. First, we show the DDL statement for creating a corresponding Distributed table for this setup:

The ON CLUSTER clause makes the DDL statement a distributed DDL statement, instructing ClickHouse to create the table on all servers listed in the test_cluster cluster definition. Distributed DDL requires an additional Keeper component in the cluster architecture.

For the distributed engine parameters, we specify the cluster name (test_cluster), the database name (uk) for the sharded target table, the sharded target table's name (uk_price_paid_simple), and the sharding key for INSERT routing. In this example, we use the rand function to randomly assign rows to shards. However, any expression—even complex ones—can be used as a sharding key, depending on the use case. The next section illustrates how INSERT routing works.

INSERT routing

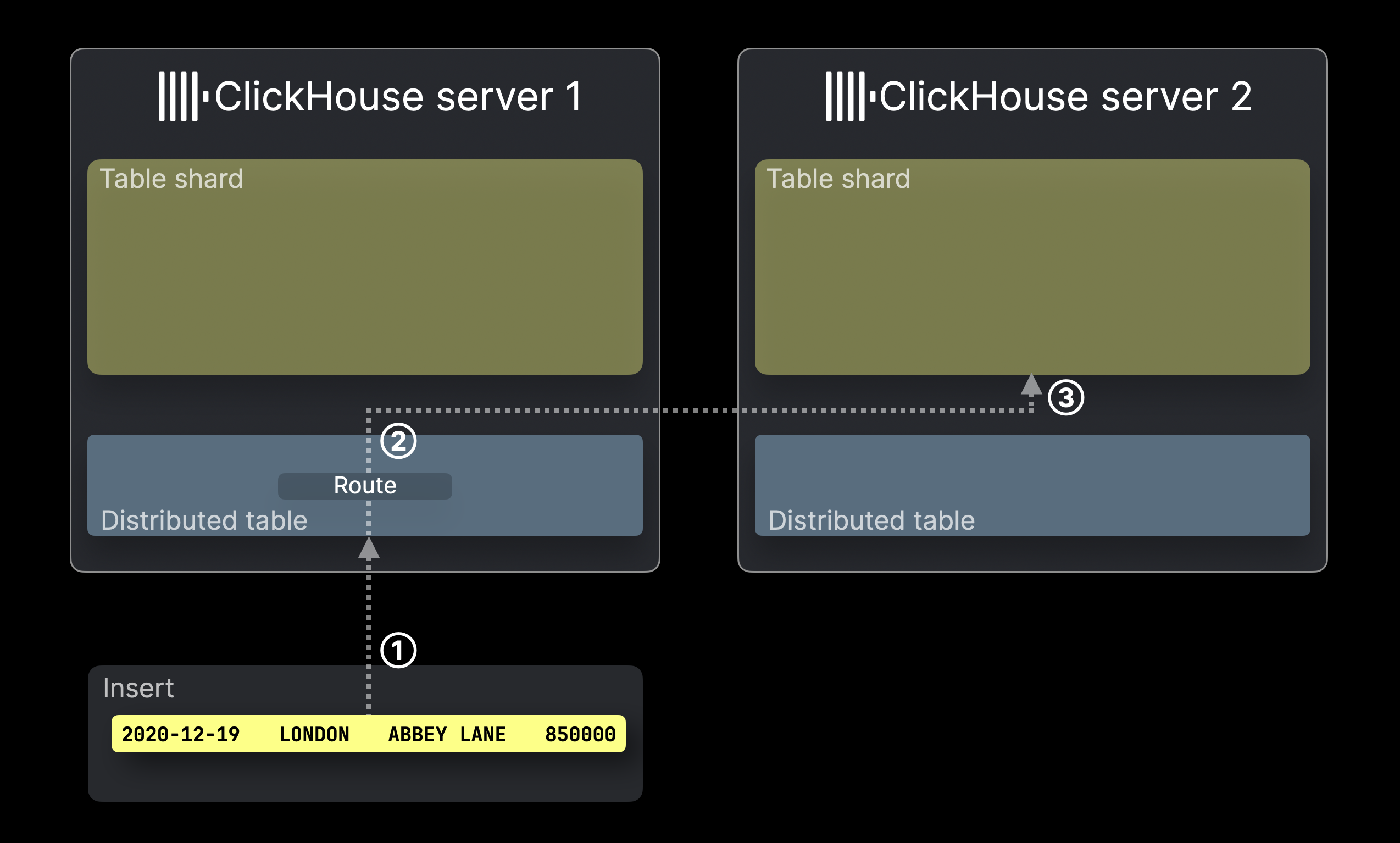

The diagram below illustrates how INSERTs into a distributed table are processed in ClickHouse:

① An INSERT (with a single row) targeting the distributed table is sent to a ClickHouse server hosting the table, either directly or via a load balancer.

② For each row from the INSERT (just one in our example), ClickHouse evaluates the sharding key (here, rand()), takes the result modulo the number of shard servers, and uses that as the target server ID (IDs start from 0 and increment by 1). The row is then forwarded and ③ inserted into the corresponding server's table shard.

The next section explains how SELECT forwarding works.

SELECT forwarding

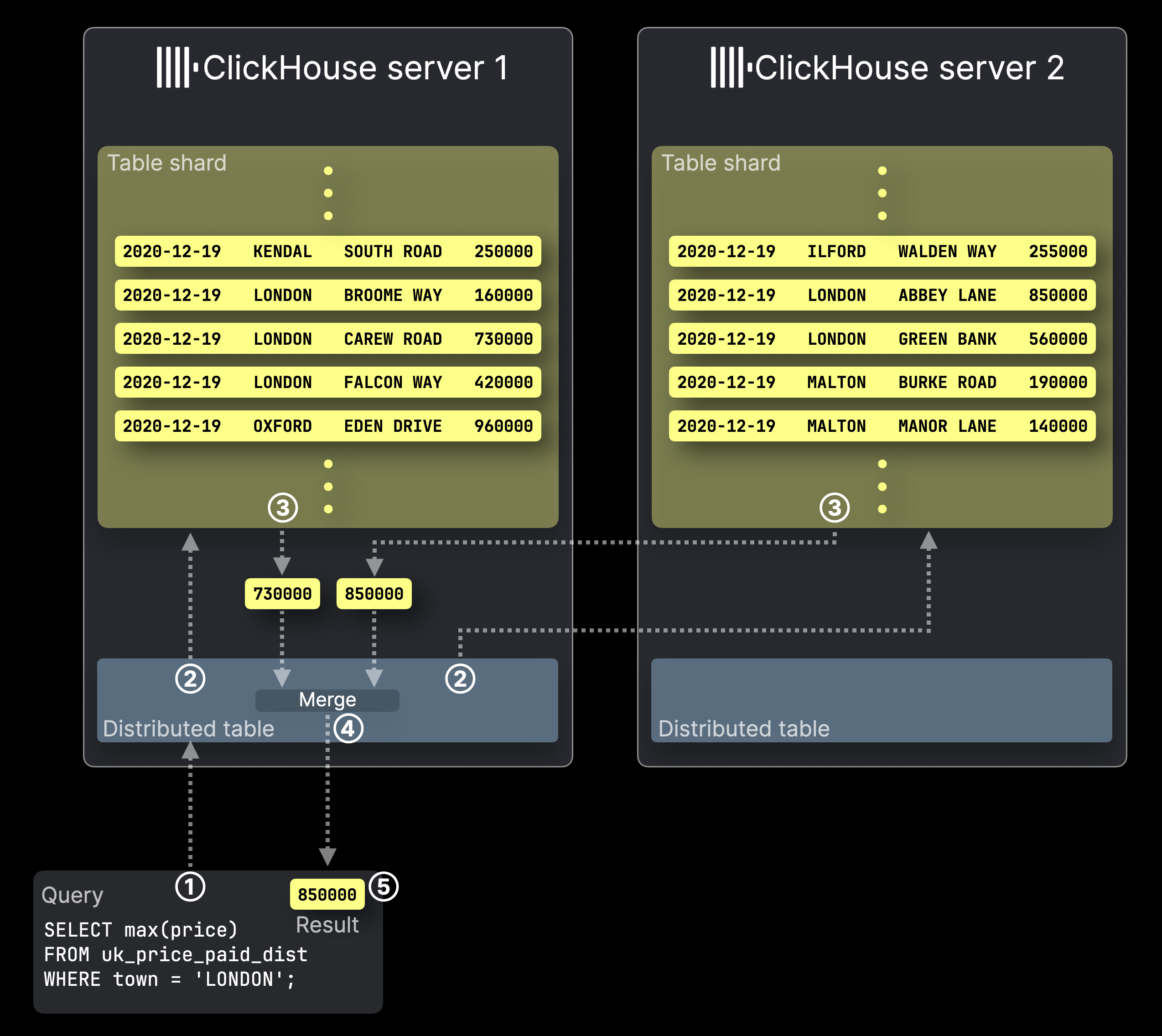

This diagram shows how SELECT queries are processed with a distributed table in ClickHouse:

① A SELECT aggregation query targeting the distributed table is sent to corresponding ClickHouse server, either directly or via a load balancer.

② The Distributed table forwards the query to all servers hosting shards of the target table, where each ClickHouse server computes its local aggregation result in parallel.

Then, the ClickHouse server hosting the initially targeted distributed table ③ collects all local results, ④ merges them into the final global result, and ⑤ returns it to the query sender.

Where to find more information

For more details beyond this high-level introduction to table shards, check out our deployment and scaling guide.

We also highly recommend this tutorial video for a deeper dive into ClickHouse shards: